AI Christmas came early: Stable Diffusion 2.0 is out, and the feature I’m most excited about is depth2img. Inferring a depth map to maintain structural coherence will be pretty sweet for all sorts of #img2img use cases. Let’s explore why.

Why Depth Aware Image-to-Image Matters

With current image-to-image workflows, the image pixels and text prompts only tell the AI model so much. No matter how you tweak the parameters, there’s a good chance that the output will deviate quite a bit from the input image, especially in terms of geometric structure.

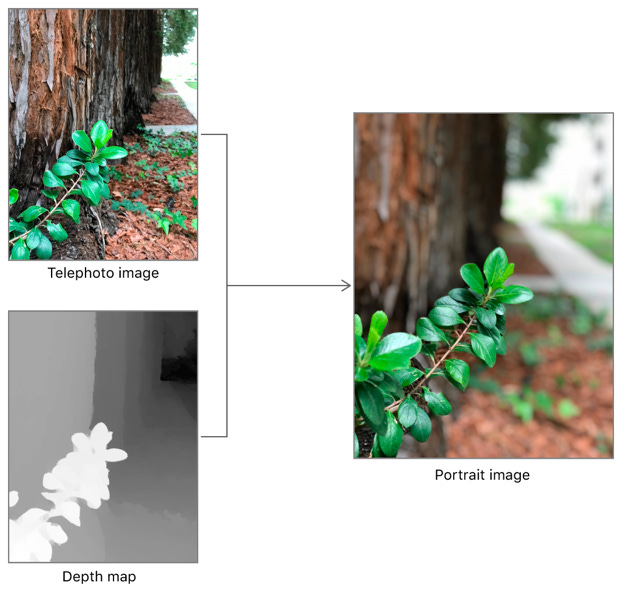

Instead, we can get a much better result by guiding the image generation process using a depth map, which coarsely represents the 3D structure of the human face in the example above. A depth map is used under the hood by your smartphone to give you that nice bokeh blur by separating you from the background, and even to relight your face while respecting its contours.

So how do we generate a depth map? Well, Stable Diffusion uses MiDaS for monocular depth estimation in its depth2img feature. MiDaS is a state-of-the-art model created by Intel and ETH Zurich researchers that can infer depth using a single 2D photo as an input.
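If you want to try this yourself, here’s a minimal sketch. It assumes the Hugging Face `diffusers` library and the public `stabilityai/stable-diffusion-2-depth` checkpoint, neither of which is named in this post, so treat it as one possible route rather than the official one. The `depth2img` function name and its defaults are mine, purely for illustration.

```python
MODEL_ID = "stabilityai/stable-diffusion-2-depth"  # assumed checkpoint, not named in the post


def depth2img(image_path, prompt, strength=0.7, negative_prompt=None):
    """Re-render the image at image_path to match prompt, guided by estimated depth.

    strength in (0, 1]: higher values let the output drift further from the input pixels.
    """
    # Heavy dependencies are imported lazily so this file loads without them.
    import torch
    from diffusers import StableDiffusionDepth2ImgPipeline
    from PIL import Image

    # Use half precision on a GPU, full precision on CPU (slow, but it works).
    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(MODEL_ID, torch_dtype=dtype)
    pipe = pipe.to(device)

    init_image = Image.open(image_path).convert("RGB")
    # No depth_map is passed, so the pipeline estimates one from the image itself.
    result = pipe(
        prompt=prompt,
        image=init_image,
        negative_prompt=negative_prompt,
        strength=strength,
    )
    return result.images[0]
```

Calling something like `depth2img("portrait.png", "a bronze statue, studio lighting").save("out.png")` (with `portrait.png` standing in for your own photo) should keep the pose and face geometry while swapping the look. The first run downloads several gigabytes of weights.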

What else can we do with depth2img? While this type of “approximate” depth is a good start, I suspect we’ll quickly see a Blender plug-in that plumbs in a far more accurate z-depth pass for 3D img2img fun. Since 3D software is already dimensional (duh!), generating such a synthetic depth map is trivial, and already used extensively in VFX workflows.
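One wrinkle with plumbing in a rendered z-pass: MiDaS-style models predict relative inverse depth (nearer surfaces get larger values), while a z-pass usually stores distance from the camera. Here’s a rough sketch of the conversion in plain Python. The function name is hypothetical, and whether a given depth2img tool expects exactly this convention and range is an assumption you should check against its docs.

```python
def zdepth_to_relative_inverse_depth(z_pass, min_distance=1e-6):
    """Convert a z-depth pass (distance from camera, any unit) to values in [0, 1].

    1.0 marks the nearest surface and 0.0 the farthest, matching the
    "nearer is larger" convention of relative inverse depth.
    """
    # Invert each distance; clamp to avoid dividing by zero at the camera.
    inverse = [[1.0 / max(z, min_distance) for z in row] for row in z_pass]
    lo = min(min(row) for row in inverse)
    hi = max(max(row) for row in inverse)
    span = hi - lo
    if span == 0.0:
        # A flat scene carries no depth cue; return a uniform map.
        return [[0.0 for _ in row] for row in inverse]
    # Rescale so the farthest pixel maps to 0.0 and the nearest to 1.0.
    return [[(v - lo) / span for v in row] for row in inverse]


# Toy 2x2 z-pass: one near pixel (1 unit), one mid (2 units), two far (4 units).
depth = zdepth_to_relative_inverse_depth([[1.0, 2.0], [4.0, 4.0]])
```

In a real pipeline you would do this on an array loaded from the render’s depth channel, but the idea is the same: invert, then normalize.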

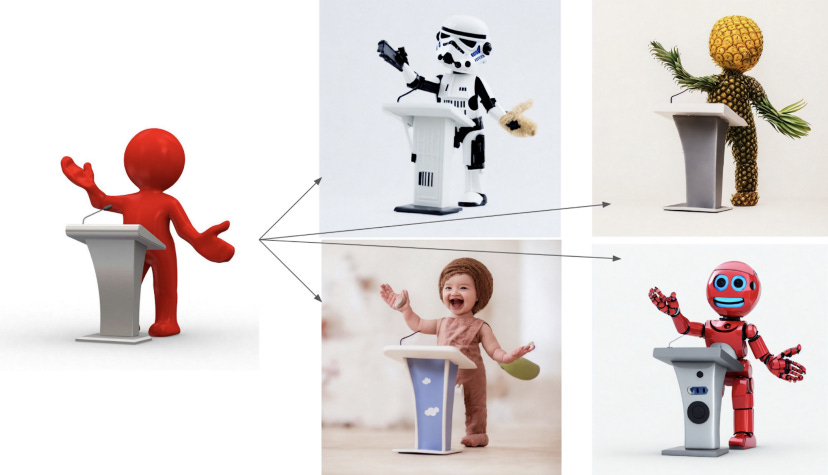

What this means is that artists can quickly “greybox” a 3D scene, focusing on the spatial layout rather than the textures, lighting and shading, and immediately explore a multitude of directions with generative AI before committing to implementing one “for real.” Such depth-aware img2img workflows will save countless hours in 3D world building and concept art. Check out this example below to imagine what’s in store:

What else? I demand more! Of course, given my particular set of passions, what I wanna do is plumb in metrically accurate depth from a photogrammetry or LiDAR scan, or even a NeRF (neural radiance field), to take these “reskinning reality” experiments I’ve been loving to the next level… unless someone else beats me to it, which would be pretty cool :)

Update: Instead, the creative @CoffeeVectors made an amazing example, taking a generic 3D character walk cycle animation and “upleveling” it to photorealistic quality. Look at that hair and facial lighting! A little jitter, but nothing the more temporally coherent models of the future won’t be able to fix.

The velocity of these innovations cannot be overstated. Just four years ago, in 2018 at Google, using multi-view stereo to generate depth maps for VFX felt cutting edge. Style transfer was the bleeding edge. But creators needed a fancy 360 camera and deep technical know-how… now all they need is a phone to capture and a browser to create. Exciting times indeed!

Enjoyed this write up? Consider recommending it to your fellow creatives and technologists. You can also hit me up on your favorite platform where I share informational and inspirational goodness: https://beacons.ai/billyfx
